Documentation/Maemo 5 Developer Guide/Architecture/Multimedia Domain


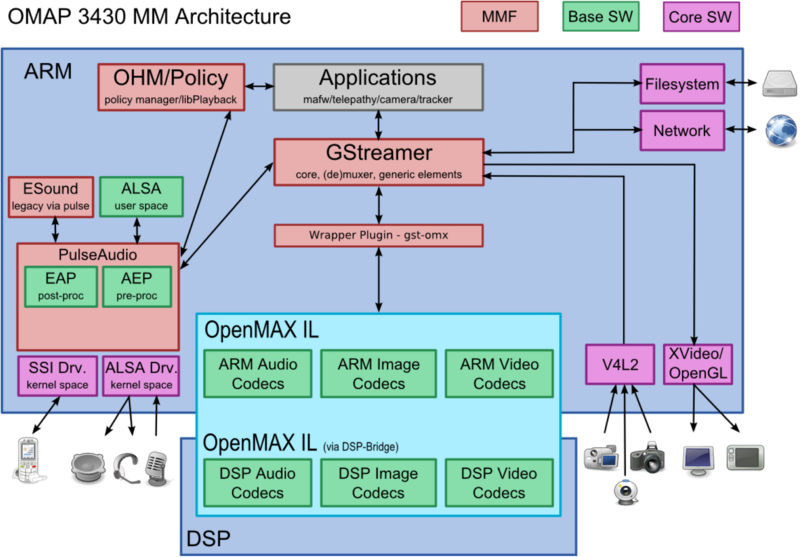


==Audio Subsystem==

[[Image:Audio_decomposition.png|frame|center|alt=UML diagram of audio subsystem|Audio subsystem]]

The alarm is played through the loudspeaker even if headphones are connected, and it cannot be mixed with other sounds. This is a UI requirement.
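The alarm rule above can be illustrated with a small sketch. This is not the actual policy code: the function names, the role strings and the fallback routing for non-alarm streams (headphones when connected, loudspeaker otherwise) are assumptions made only for illustration.

```python
def choose_output(stream_role: str, headphones_connected: bool) -> str:
    """Pick an output device for a stream (hypothetical names, not a real API)."""
    # Rule from the text: the alarm always goes to the loudspeaker.
    if stream_role == "alarm":
        return "loudspeaker"
    # Assumption: other streams follow the headphone connection status.
    return "headphones" if headphones_connected else "loudspeaker"


def may_mix(stream_role: str) -> bool:
    """Rule from the text: the alarm cannot be mixed with other sounds."""
    return stream_role != "alarm"


if __name__ == "__main__":
    print(choose_output("alarm", headphones_connected=True))
    print(choose_output("music", headphones_connected=True))
```

In the real system such decisions are taken by the policy subsystem rather than by each application.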

| - | |||

===Audio Codecs=== | ===Audio Codecs=== | ||

| - | |||

| + | Most of the audio encoders and decoders are wrapped under the OpenMAX IL interface (OMAX), which abstracts the codec specific implementation (which in general can run on ARM or DSP). Unless a different solution is needed (due e.g. to sourcing problems, performance requirements or to fulfill some specific use cases) all the audio codecs will be running on the ARM side to simplify the audio architecture and to avoid the additional latency and load over the data path due to the routing of the audio data first to DSP and then back to ARM. | ||

===ALSA library===

ALSA (Advanced Linux Sound Architecture) is a standard audio interface for Linux applications.

;Purpose
: It can be used by conventional Linux applications to play/record raw audio
;Responsibilities
: Provides transparent access to the audio driver
;License
: GPL/LGPL
;Packages
: [http://maemo.org/packages/view/alsa-utils/ alsa-utils]

====Public interface provided by ALSA library====

{| class="wikitable"
|+ Public interface provided by ALSA library
|-
! Interface name !! Description
|-
| ALSA || Interface for conventional Linux audio applications.
|}

===PulseAudio===

PulseAudio is a networked sound server, similar in theory to the Enlightened Sound Daemon (ESound). PulseAudio is, however, much more advanced and has numerous features:
*Software mixing of multiple audio streams, bypassing any restrictions the hardware has.
*PulseAudio comes with many plugin modules.

;Purpose
: It can be used by conventional Linux applications to play/record raw audio
;Responsibilities
: Provides transparent access to the audio driver and performs audio mixing and sample rate conversion.
;License
: GPL/LGPL
;Packages
: [http://maemo.org/packages/view/pulseaudio/ pulseaudio] [http://maemo.org/packages/view/pulseaudio-utils/ pulseaudio-utils]

====Public interface provided by PulseAudio====

{| class="wikitable"
|+ Public interface provided by PulseAudio
|-
! Interface name !! Description
|-
| ESound || ESD (Enlightened Sound Daemon) is a standard audio interface for Linux applications.<br/>Note: the interface is provided for backwards compatibility only. No new software should use it anymore.
|-
| PulseAudio || Internal interface for audio applications (e.g. used by the GStreamer Pulse Sink and Source)
|}

===EAP and AEP===

The EAP (Entertainment Audio Platform) package is used for audio post-processing (music DRC and stereo widening).

AEP (Audio Enhancements Package) is a full duplex speech audio enhancement package including echo cancellation, background noise suppression, DRC, AGC, etc.

Both EAP and AEP are implemented as PulseAudio modules.

====License====

Nokia

===FMTX Middleware===

FMTX middleware provides a daemon for controlling the [[N900 FM radio transmitter|FM Transmitter]]. The daemon listens for commands from clients via the D-Bus system bus. The frequency of the transmitter is controlled via the Video4Linux2 interface. Note that the transmitter must be unmuted before changing the frequency, because the device is muted by default and is not powered while it is muted. Other settings are controlled by sysfs files in the directory <code>/sys/bus/i2c/devices/2-0063/</code>.

The wire of the headset acts as an antenna, boosting FMTX transmission power over allowed limits. Therefore the daemon monitors plugged devices and powers the transmitter down if a headset is connected.

GConf: system/fmtx/:
*Bool enabled
*Int frequency
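The ordering constraints described above (unmute before tuning, power down when a headset is plugged in) can be sketched as a small planning function. This is only an illustrative model, not the daemon's code: the function name and the step names are hypothetical, and the frequency value is passed through without assuming a unit.

```python
def plan_frequency_change(muted: bool, headset_connected: bool, new_frequency: int) -> list:
    """Return the ordered steps for a frequency change (hypothetical step names)."""
    # Rule from the text: with a headset connected the transmitter is powered down.
    if headset_connected:
        return [("power_down", None)]
    steps = []
    # Rule from the text: a muted device is not powered, so unmute first.
    if muted:
        steps.append(("unmute", None))
    steps.append(("set_frequency", new_frequency))
    return steps


if __name__ == "__main__":
    for step in plan_frequency_change(muted=True, headset_connected=False, new_frequency=107900):
        print(step)
```

The value 107900 in the usage example is an arbitrary placeholder, not a documented setting.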

====License====

Nokia

===Audio/Video Synchronization===

The audio/video synchronization is done using the standard mechanisms built into the GStreamer framework. This requires that a clock source is available. The clock must stay in sync with the audio HW. As on normal Linux desktops, this is achieved by exporting the interrupts from the audio chip through ALSA and the corresponding GStreamer element.
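The idea of synchronizing video against an audio-driven clock can be sketched as follows. This is a simplified model and not GStreamer code: the function name, the three outcomes and the 20 ms lateness tolerance are assumptions chosen for illustration.

```python
def frame_action(frame_pts_ns: int, audio_clock_ns: int, max_lateness_ns: int = 20_000_000) -> str:
    """Decide what to do with a video frame, given the current audio clock time."""
    lateness = audio_clock_ns - frame_pts_ns
    if lateness < 0:
        # The frame is early: wait until the audio clock reaches its timestamp.
        return "wait"
    if lateness <= max_lateness_ns:
        # The frame is on time or only slightly late: show it.
        return "render"
    # The frame is too late to be useful: skip it to catch up with the audio.
    return "drop"


if __name__ == "__main__":
    print(frame_action(frame_pts_ns=1_000_000_000, audio_clock_ns=990_000_000))
```

Because the audio hardware drives the clock, video adapts to audio rather than the other way round, which avoids audible glitches.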

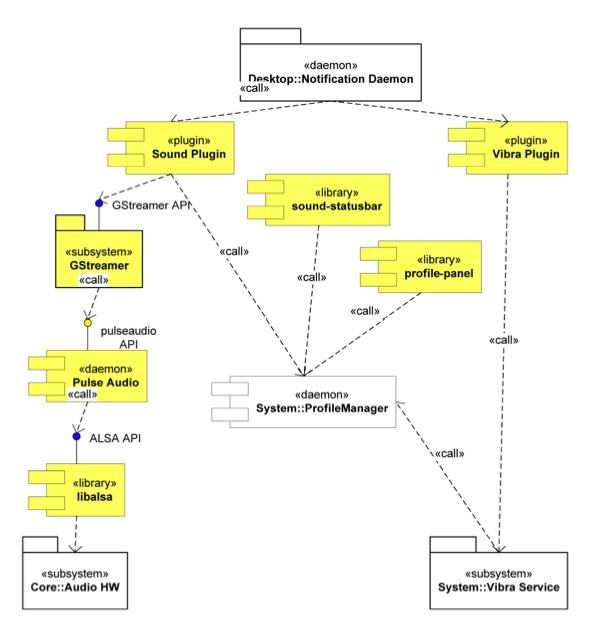

==Notification subsystem==

MMF delivers parts of the notification mechanism. The subsystem is illustrated below:

[[Image:Notification_decomposition.png|frame|center|alt=UML diagram of notification subsystem|Notification subsystem]]

===Notification Plugins===

Two notification plugins are provided:
*Sound notification plugin: Plays events and notification sounds

===Input Event Sounds===

The input event sounds module uses the X server XTest extension (http://www.xfree86.org/current/xtestlib.pdf) to produce input event feedback via libcanberra. The input-sound module is started with the XSession as a separate process.

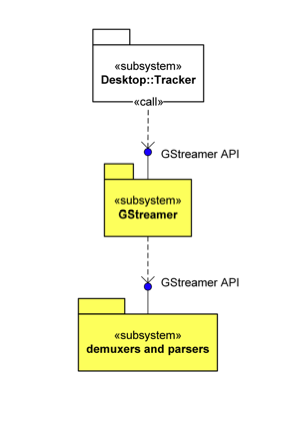

==Metadata Subsystem==

The multimedia framework supports the search engine when indexing media files. The subsystem is illustrated below:

[[Image:Metadata_decomposition.png|frame|center|alt=UML diagram of metadata subsystem|Metadata subsystem]]

===Decodebin2/TagreadBin===

The desktop search (Tracker) can use high-level GStreamer components (decodebin2/tagreadbin) to gather metadata from all supported media files. Tagreadbin can provide better performance than playbin2 because it avoids plugging decoders and uses a special code path in parsers and demuxers that retrieves only metadata.

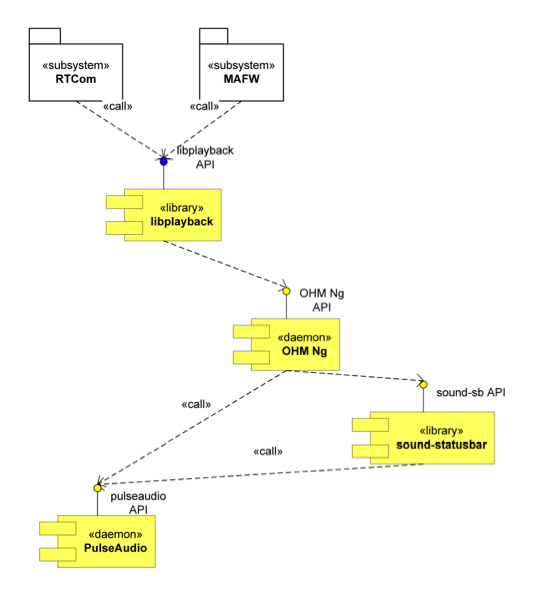

==Policy Subsystem==

Running several multimedia use cases at the same time is neither user friendly nor always possible. To provide predictable and stable behavior, multimedia components interact with a system-wide policy component that keeps concurrent multimedia use cases under control. The subsystem is illustrated below. The policy engine is based on the OHM framework. It is dynamically configurable with scripting and a Prolog-based rule database.

[[Image:Policy_decomposition.png|frame|center|alt=UML diagram of policy subsystem|Policy subsystem]]

===libplayback===

libplayback is a client API that allows an application to declare its playback state. The library uses D-Bus to talk to a central component that manages the states. Media applications can use the API to synchronize their playback state.

;Purpose
: It can be used by media applications to synchronize their playback state. This includes audio playback when the silent profile is active.
;Responsibilities
: Provides an interface for media playback management
;License
: Nokia
;Packages
: [http://maemo.org/packages/view/libplayback-1-0/ libplayback]
;Documentation and example code
: [http://maemo.org/api_refs/5.0/5.0-final/libplayback-1/ Documentation]
: [http://talk.maemo.org/showthread.php?t=67157 Thread on libplayback]

====Public interface provided by libplayback====

{| class="wikitable"
|+ Public interface provided by libplayback
|-
! Interface name !! Description
|-
| D-Bus API || Interface for requesting/getting a playback state.
|}

===OHM Ng===

OHM Ng is an extension on top of OHM (the Open Hardware Manager project). OHM Ng provides the policy management for the system. In Fremantle, system policy only deals with multimedia resources.

;Purpose
: System policy management
;Responsibilities
: <ul><li>Receives policy requests</li><li>Evaluates policy rules</li><li>Notifies applications of policy decisions</li><li>Uses available enforcement points</li></ul>
;License
: Nokia
;Packages
: [http://maemo.org/packages/view/ohm/ ohm], [http://maemo.org/packages/view/ohm-plugin-core0/ ohm-plugins-core0], [http://maemo.org/packages/view/ohm-dev/ ohm-dev], [http://maemo.org/packages/view/ohm-dbg/ ohm-dbg] (includes some enforcement point plugins and libraries)

===PulseAudio Policy Enforcement Point===

A PulseAudio plugin that manages volume levels, re-routes streams and, if needed, forcefully shuts down streams.

;Purpose
: Monitors the headset/headphone connection status
;Responsibilities
: <ul><li>Sets the correct audio routing according to the headset/headphone connection status</li><li>Sets the volume levels at device start-up and ensures the volume is put to a safe level when headphones are used</li><li>Sets the correct tuning for Audio Post Processing and the Audio Enhancement Package</li></ul>
;License
: Nokia
;Packages
: [http://maemo.org/packages/view/ohm/ ohm], [http://maemo.org/packages/view/ohm-plugin-core0/ ohm-plugins-core0], [http://maemo.org/packages/view/ohm-dev/ ohm-dev], [http://maemo.org/packages/view/ohm-dbg/ ohm-dbg] (includes some enforcement point plugins and libraries)

[[Category:Media]]
[[Category:Documentation]]
[[Category:Fremantle]]

Latest revision as of 17:26, 30 September 2014

The Multimedia Framework Domain is a combination of a Multimedia Framework (GStreamer), a sound server (PulseAudio), a policy subsystem (OHM and libplayback), codecs and algorithms (for ARM and DSP), a small number of UIs (status menus and control panel applets) and various libraries (ALSA).

It provides audio/video playback, streaming services, imaging and metadata gathering support. More generally, it takes care of the actual audio/video data handling (retrieval, demuxing, decoding and encoding, seeking …).

The clients of the Multimedia framework are the Multimedia Applications, RTC subsystem, and all the Linux applications/entities which produce sounds through the PulseAudio, ESD or ALSA interfaces and capture images from the V4L2 interface. The Multimedia Framework subsystem is partially located on the user space of Linux and on the DSP.

A major challenge for the Multimedia framework is to provide the same interfaces as desktop Linux across different hardware platforms. To achieve this, all audio processing is moved to the ARM side (ALSA / PulseAudio) and OpenMAX IL is introduced to abstract the hardware-specific components (codecs and other hardware-accelerated elements).


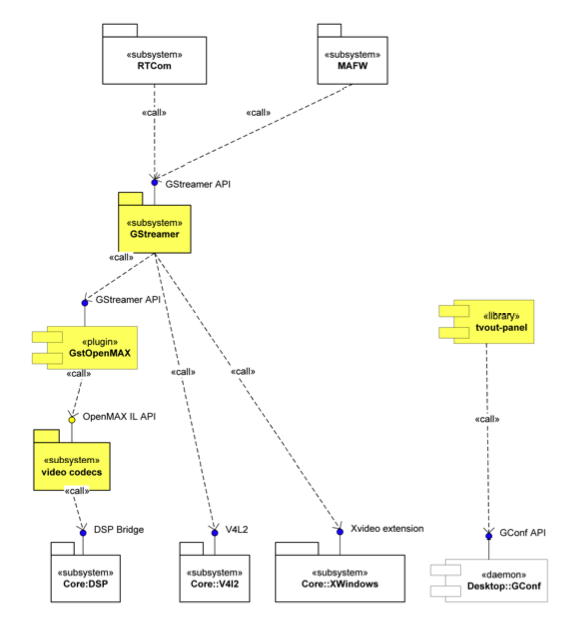

==Video subsystem==

===Video Codecs===

Most of the video encoders and decoders are wrapped under the OpenMAX IL interface [OMAX], which abstracts the codec-specific implementation (which in general can run on ARM or DSP). All the OpenMAX IL video codecs run on the DSP side in order to best exploit the HW acceleration provided by the OMAP 3430 platform. The DSP-based OpenMAX IL components are loaded by TI's IL-Core, which in turn uses LCML and the DSP-Bridge. The ARM-based OpenMAX IL components are loaded via the Bellagio IL-Core. Both IL-Cores are used by the gst-openmax bridge. The GStreamer framework resides on the ARM side.

Video post-processing is performed on the DSS screen accelerator. DSS is used to do the colorspace conversion, the scaling and the composition, including overlays. A separate external graphics accelerator is used to refresh the screen. If needed (in complicated use cases), the scaling and colorspace conversion can be done on the ARM side as well, but that is not recommended because it is not optimized. A/V synchronization is done on the ARM, using an audio clock that is based on information from the audio interface.

The communication between ARM and DSP software is provided by the TI DSP bridge. Any messages or data buffers exchanged between the ARM and DSP go through it. This layer can be regarded as transparent from the Multimedia Architecture point of view, and hence it is not described in this document.

===GStreamer===

GStreamer is a cross-platform media framework that covers most multimedia application use cases, from playback to streaming and imaging. It is a huge collection of objects, interfaces, libraries and plugins. From the application point of view it is just one utility library that can be used by applications to process media streams. The library interface is actually a facade to a versatile collection of dynamic modules that implement the actual functionality. The GStreamer core hides the complexity of timing issues, synchronization, buffering, threading, streaming and other functionality needed to produce a usable media application.

When using GStreamer as a media playback framework, the application developer is given a set of tools and building blocks for implementing the decoding engine. The framework provides the actual decoding and data streaming services, but it is up to the developer to decide how to use these tools. Application logic and the use of GStreamer's services are the developer's tasks.

GStreamer was chosen as a base framework on the ARM side because of the following reasons:

*GStreamer is a development framework for creating applications like media players, video editors, streaming media broadcasters and so on.
*GStreamer’s development started back in 1999, so it is a relatively long-lived open source project. Releases have been frequent so far.
*There are many famous open source codecs supported by GStreamer and some example players based on GStreamer are available.
*GStreamer developers have a close relationship with the GNOME community, which is a potential advantage later on.
*GStreamer is written in C, which can be an advantage in embedded systems.
*GStreamer provides good modularity and flexibility. Hence, building applications on GStreamer in a short time is possible.
*GStreamer is licensed under the LGPL, which allows the Multimedia Project to combine GStreamer with proprietary software.

====Public interfaces provided by GStreamer====

{| class="wikitable"
|+ Public interfaces provided by GStreamer
|-
! Interface name !! Description
|-
| GStreamer API || Interface for Multimedia applications, VOIP etc
|-
| <code>playbin2</code> || Recommended high level element for playback.
|-
| <code>uricodebin</code> || Recommended high level element for decoding.
|-
| <code>tagreadbin</code> || Recommended high level element for fast metadata reading.
|-
| <code>camerabin</code> || Recommended high level element for camera application.
|}
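As a rough illustration of how the <code>playbin2</code> element might be exercised from a shell, the sketch below builds a <code>gst-launch-0.10</code> command line for a local file. It assumes that the GStreamer 0.10 command-line tools are installed; the helper name and the example path are hypothetical, and the script only prints the command instead of running it.

```python
import shlex
from pathlib import Path


def playbin2_command(media_path: str, launcher: str = "gst-launch-0.10") -> list:
    """Build the argument list that plays a local file through playbin2."""
    # playbin2 takes a URI, so convert the file path to a file:// URI.
    uri = Path(media_path).absolute().as_uri()
    return [launcher, "playbin2", f"uri={uri}"]


if __name__ == "__main__":
    # Hypothetical example path; print the command rather than executing it.
    print(shlex.join(playbin2_command("/home/user/MyDocs/clip.mp4")))
```

Applications would normally create the element through the GStreamer API instead; the command line is mainly useful for quick experiments.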

===OpenMAX IL===

OpenMAX is an effort to provide an industry standard for a multimedia API. The standard defines 3 layers – OpenMAX DL (Development Layer), OpenMAX IL (Integration Layer) and OpenMAX AL (Application Layer). DL is a vendor-specific and optional component. IL is the layer that interfaces with IL components (e.g. codecs). We will integrate the TI IL-Core for the DSP components and the Bellagio IL-Core for ARM components. Neither of the cores uses DL. We use GStreamer’s gomx module to transparently make OpenMAX IL components available to any GStreamer application.
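The split between the two IL cores can be summarized in a small lookup sketch. It only restates the placement described in this document (video and image codecs on the DSP, audio codecs on the ARM by default); the table and function names are hypothetical and do not correspond to any real API.

```python
# Default execution side per codec kind, as described in this document.
DEFAULT_SIDE = {"video": "DSP", "image": "DSP", "audio": "ARM"}

# IL core responsible for loading components on each side.
IL_CORE = {"DSP": "TI IL-Core", "ARM": "Bellagio IL-Core"}


def il_core_for(codec_kind: str, side: str = None) -> str:
    """Return the IL core that would load a codec (illustrative lookup only)."""
    # An explicit side overrides the default, e.g. an ARM-based video codec.
    chosen_side = side if side is not None else DEFAULT_SIDE[codec_kind]
    return IL_CORE[chosen_side]


if __name__ == "__main__":
    for kind in ("video", "image", "audio"):
        print(kind, "->", il_core_for(kind))
```

Either way the application sees the same GStreamer elements, since both cores sit behind the gst-openmax bridge.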

TI OpenMAX IL Core

- Purpose

- OpenMAX IL Core implementation for DSP.

- Responsibilities

- Provides media handling services.

- License

- TI

Bellagio OpenMAX IL Core

- Purpose

- Generic OpenMAX IL Core implementation (used on ARM side)

- Responsibilities

- Provides media handling services.

- License

- LGPL

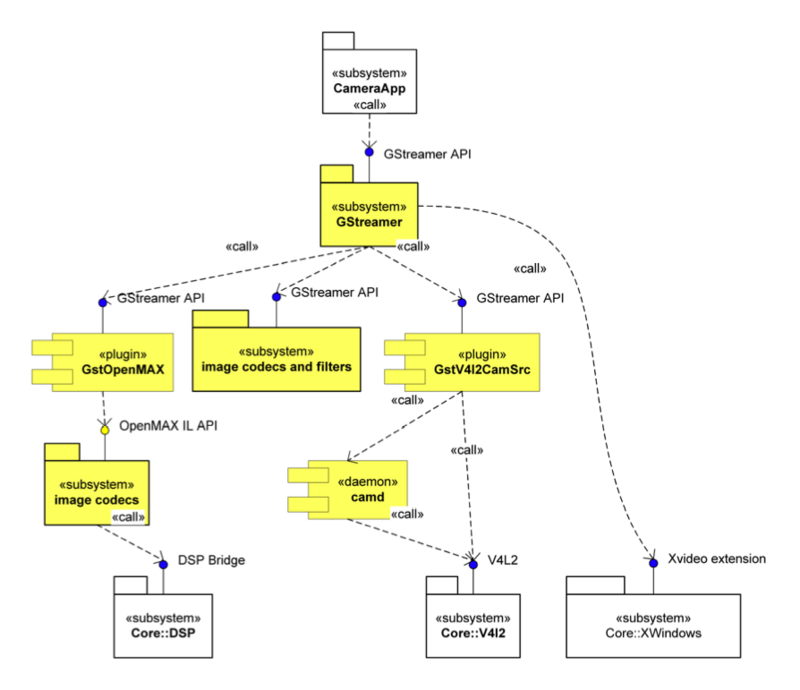

Imaging subsystem

The multimedia framework also provides support for imaging applications. The subsystem is illustrated below.

Camera Source

GstV4L2CamSrc is a fork of the GstV4l2Src plugin. The reason for the fork is that the original plugin contains a lot of extra code for handling various V4L2 devices (such as tuner cards), which made the code quite complex.

GStreamer V4L2 Camera Source

- Purpose

- Image and Video capture

- Responsibilities

- Captures RAW and YUV images

- License

- LGPL

- Packages

- gstreamer0.10-plugins-camera

Image Codecs and Filters

The image encoders and decoders are wrapped under the OpenMAX IL interface (OMAX), which abstracts the codec specific implementation (which in general can run on ARM or DSP). All the image codecs will be running on the DSP side to best exploit the HW acceleration provided by the OMAP 3430 platform. The post processing filters needed for the camera application will be implemented as GStreamer elements and will run on the DSP.

Camera Daemon

The camera daemon holds imaging-specific extensions to the V4L2 interface. Specifically, it runs the so-called 3A algorithms: autofocus (AF), auto white balance (AWB) and auto exposure (AE).

- Purpose

- Controls imaging-specific aspects not yet covered by standard V4L2

- Responsibilities

- Runs 3A algorithms

- License

- Nokia

Public interface provided by camera daemon

| Interface name | Description |
|---|---|
| V4L2 ioctl | Extensions for V4L2 protocol |

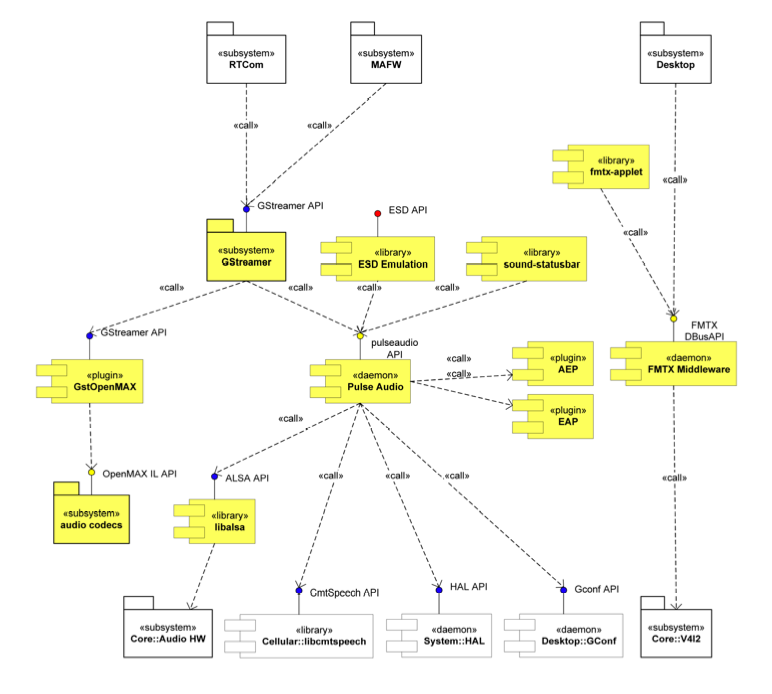

Audio Subsystem

The audio subsystem has to take into account some requirements coming from hardware characteristics which influence the design of the software subsystem:

- Audio signals can be output through the IHF or through wired/Bluetooth headsets

- The loudspeaker and headphones share the same audio signal

- Hardware volume control is provided for both headset and for IHF, but not for microphones.

- Microphone selection between IHF and headset is done using an analog switch; there is no hardware amplification control for either.

These aspects led to the following design choices:

- Audio signal routing is done using headphone and IHF amplifier volume control

- Microphone AGC is implemented

- Master volume control will correspond to:

- IHF amplifier volume control when IHF is in use

- Headphone amplifier volume control when headset/headphone is in use

- BT headset/headphone volume setting when BT is in use
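The master volume mapping above can be sketched as a simple lookup from the active output route to the control that the master volume drives. This is a minimal illustration; the `Route` enum, the `master_volume_target` function and the label strings are hypothetical names introduced here, not part of the actual implementation.

```python
from enum import Enum


class Route(Enum):
    """Output routes named in the text (hypothetical enum for this sketch)."""
    IHF = "ihf"
    WIRED = "wired headset/headphone"
    BLUETOOTH = "bt headset/headphone"


# Which control the master volume corresponds to, per active route.
MASTER_VOLUME_TARGET = {
    Route.IHF: "IHF amplifier volume control",
    Route.WIRED: "headphone amplifier volume control",
    Route.BLUETOOTH: "BT headset/headphone volume setting",
}


def master_volume_target(active_route):
    """Return the control the master volume maps to for the active route."""
    return MASTER_VOLUME_TARGET[active_route]


for route in Route:
    print(route.name, "->", master_volume_target(route))
```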

In addition, the Audio Post-Processor is always enabled. It needs different tunings for the IHF, a wired headset (assumed to be HS-48-like), a BT headset and generic headphones.

Alarms are played through the loudspeaker even if headphones are connected, and cannot be mixed with other sounds. This is a UI requirement.

Audio Codecs

Most of the audio encoders and decoders are wrapped under the OpenMAX IL interface (OMAX), which abstracts the codec-specific implementation (which in general can run on ARM or DSP). Unless a different solution is needed (for example, because of sourcing problems, performance requirements or specific use cases), all the audio codecs will run on the ARM side. This simplifies the audio architecture and avoids the additional latency and load on the data path that would result from routing the audio data first to the DSP and then back to the ARM.

ALSA library

ALSA (Advanced Linux Sound Architecture) is a standard audio interface for Linux applications.

- Purpose

- It can be used by conventional Linux applications to play/record raw audio

- Responsibilities

- Provides transparent access to the audio driver

- License

- GPL/LGPL

- Packages

- alsa-utils

Public interface provided by ALSA library

| Interface name | Description |
|---|---|
| ALSA | Interface for conventional Linux audio applications. |

PulseAudio

PulseAudio is a networked sound server, similar in theory to the Enlightened Sound Daemon (ESound). PulseAudio is however much more advanced and has numerous features:

- Software mixing of multiple audio streams, bypassing any restrictions the hardware has.

- Sample rate conversion of audio streams

- Network transparency, allowing an application to play back or record audio on a different machine than the one it is running on.

- Sound API abstraction, alleviating the need for multiple backends in applications to handle the wide diversity of sound systems out there.

- Generic hardware abstraction, giving the possibility of doing things like individual volumes per application.

- PulseAudio comes with many plugin modules.
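To make the first two features concrete, the sketch below mixes two signed 16-bit PCM streams by summing and clamping, and converts a stream between sample rates with linear interpolation. It is a simplified stand-in written for this page: PulseAudio's own mixer and resamplers are more sophisticated, and the function names `mix_s16` and `resample_linear` are hypothetical.

```python
S16_MIN, S16_MAX = -32768, 32767


def mix_s16(a, b):
    """Mix two signed 16-bit sample lists by summing and clamping."""
    length = max(len(a), len(b))
    mixed = []
    for i in range(length):
        total = (a[i] if i < len(a) else 0) + (b[i] if i < len(b) else 0)
        mixed.append(max(S16_MIN, min(S16_MAX, total)))
    return mixed


def resample_linear(samples, src_rate, dst_rate):
    """Convert the sample rate with linear interpolation (illustrative only)."""
    if not samples:
        return []
    out_len = len(samples) * dst_rate // src_rate
    out = []
    for i in range(out_len):
        pos = i * src_rate / dst_rate          # position in the source stream
        j = int(pos)
        frac = pos - j
        nxt = samples[min(j + 1, len(samples) - 1)]
        out.append(round(samples[j] * (1 - frac) + nxt * frac))
    return out


# Two streams at different rates are brought to a common rate, then mixed.
stream_a = [0, 1000, 2000, 3000]                    # pretend 8000 Hz
stream_b = resample_linear([500, 1500], 4000, 8000)  # pretend 4000 Hz source
print(mix_s16(stream_a, stream_b))
```

Clamping keeps the sum inside the 16-bit range instead of letting it wrap around, which is why software mixing can combine any number of streams regardless of how many the hardware accepts.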

- Purpose

- It can be used by conventional Linux applications to play/record raw audio

- Responsibilities

- Provides transparent access to the audio driver and performs audio mixing and sample rate conversion.

- License

- GPL/LGPL

- Packages

- pulseaudio, pulseaudio-utils

Public interface provided by PulseAudio

| Interface name | Description |
|---|---|
| ESound | ESD (Enlightened Sound Daemon) is a standard audio interface for Linux applications. Note: the interface is provided for backwards compatibility only. No new software should use it. |
| PulseAudio | Internal interface for audio applications (e.g. used by the GStreamer Pulse sink and source) |

EAP and AEP

The EAP (Entertainment Audio Platform) package is used for audio post-processing (music DRC and stereo widening).

AEP (Audio Enhancements Package) is a full duplex speech audio enhancement package including echo cancellation, background noise suppression, DRC, AGC, etc. Both EAP and AEP are implemented as a PulseAudio module.

License

Nokia

FMTX Middleware

The FMTX middleware provides a daemon for controlling the FM transmitter. The daemon listens for commands from clients via the D-Bus system interface. The frequency of the transmitter is controlled via the Video4Linux2 interface. Note that the transmitter must be unmuted before the frequency is changed: the device is muted by default, and while it is muted it is not powered. Other settings are controlled through sysfs files in the directory /sys/bus/i2c/devices/2-0063/.

The wire of the headset acts as an antenna, boosting the FMTX transmission power over the allowed limits. Therefore, the daemon monitors plugged-in devices and powers the transmitter down if a headset is connected.

GConf keys under system/fmtx/:

- Bool enabled

- Int frequency
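The rules described above (muted and unpowered by default, unmute before tuning, power down when a headset is plugged in) can be summarised in a small state model. This is only a sketch of the behaviour as described on this page: the class `FmTransmitterModel` and its methods are hypothetical, it does not talk to D-Bus, V4L2, sysfs or GConf, and the frequency value is treated as an opaque integer because the page does not state its unit.

```python
class FmTransmitterModel:
    """Toy model of the FM transmitter daemon's rules (hypothetical class)."""

    def __init__(self):
        self.enabled = False            # mirrors the GConf Bool "enabled"
        self.frequency = None           # mirrors the GConf Int "frequency"
        self.muted = True               # device is muted, hence unpowered, by default
        self.headset_connected = False

    def enable(self):
        """Unmute (power up) the transmitter unless a headset is plugged in."""
        if self.headset_connected:
            return False
        self.muted = False
        self.enabled = True
        return True

    def disable(self):
        """Mute the transmitter, which also powers it down."""
        self.muted = True
        self.enabled = False

    def set_frequency(self, frequency):
        """Tune the transmitter; only allowed while it is unmuted."""
        if self.muted:
            raise RuntimeError("transmitter must be unmuted before tuning")
        self.frequency = frequency

    def on_headset_changed(self, connected):
        """Power the transmitter down as soon as a headset is connected."""
        self.headset_connected = connected
        if connected:
            self.disable()


tx = FmTransmitterModel()
tx.enable()
tx.set_frequency(1)             # opaque illustrative value
tx.on_headset_changed(True)     # headset wire would act as an antenna
print(tx.enabled, tx.muted)
```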

License

Nokia

Audio/Video Synchronization

Audio/video synchronization is done using the standard mechanisms built into the GStreamer framework. This requires that a clock source is available, and the clock must stay in sync with the audio hardware. As on normal Linux desktops, this is achieved by exporting the interrupts from the audio chip through ALSA and the corresponding GStreamer element.
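The core of GStreamer's synchronization model is that a buffer is rendered when the pipeline's running time (clock time minus base time) reaches the buffer's timestamp. The sketch below shows that comparison for a video frame against an audio-driven clock. It is a simplified illustration: it ignores segments, latency and QoS handling, and the function names and the lateness threshold are assumptions chosen for this example.

```python
def running_time_ns(clock_now_ns, base_time_ns):
    """Running time of the pipeline: clock time minus base time."""
    return clock_now_ns - base_time_ns


def schedule_frame(frame_pts_ns, clock_now_ns, base_time_ns,
                   max_lateness_ns=20_000_000):
    """Decide what to do with a video frame relative to the shared clock.

    Returns ("wait", ns) if the frame is early, ("render", 0) if it is due
    or only slightly late, and ("drop", 0) if it is later than the
    illustrative `max_lateness_ns` threshold.
    """
    delta = frame_pts_ns - running_time_ns(clock_now_ns, base_time_ns)
    if delta >= 0:
        return ("wait", delta)
    if -delta <= max_lateness_ns:
        return ("render", 0)
    return ("drop", 0)


# Clock started at base time 1 s; it now reads 1.5 s, so running time is 0.5 s.
print(schedule_frame(1_000_000_000, 1_500_000_000, 1_000_000_000))
```

Because the clock follows the audio hardware, video frames are scheduled against the rate at which audio is actually consumed, which is what keeps the two streams aligned.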

Notification subsystem

MMF delivers parts of the notification mechanism. The subsystem is illustrated below:

Notification Plugins

Two notification plugins are provided:

- Sound notification plugin: Plays events and notification sounds

- Vibra notification plugin: Emits bursts of vibration and controls the vibration hardware.

Input Event Sounds

The input event sounds module uses the X server XTest extension (http://www.xfree86.org/current/xtestlib.pdf) to produce input event feedback via libcanberra. The input-sound module is started with the XSession as a separate process.

Metadata Subsystem

The multimedia framework supports the search engine when indexing media files. The subsystem is illustrated below:

Decodebin2/TagreadBin

The desktop search (Tracker) can use high-level GStreamer components (decodebin2/tagreadbin) to gather metadata from all supported media files. Tagreadbin can provide better performance than playbin2 because it does not plug decoders and uses a special code path in the parsers and demuxers that extracts only metadata.

Policy Subsystem

It is neither user-friendly nor always possible to run several multimedia use cases at the same time. To provide predictable and stable behavior, multimedia components interact with a system-wide policy component that keeps concurrent multimedia use cases under control. The subsystem is illustrated below. The policy engine is based on the OHM framework. It is dynamically configurable through scripting and a Prolog-based rule database.

libplayback

libplayback is a client API that allows an application to declare its playback state. The library uses D-Bus to talk to a central component that manages the states. Media applications can use the API to synchronize their playback state.

- Purpose

- It can be used by media applications to synchronize their playback state. This includes audio playback when the silent profile is active.

- Responsibilities

- Provides an interface for media playback management

- License

- Nokia

- Packages

- libplayback

- Documentation and example code

- Documentation

- Thread on libplayback

Public interface provided by libplayback

| Interface name | Description |
|---|---|
| D-Bus API | Interface for requesting/getting a playback state. |

OHM Ng

OHM Ng is an extension on top of OHM (the Open Hardware Manager project). OHM Ng provides the policy management for the system. In Fremantle, system policy only deals with multimedia resources.

- Purpose

- System policy management

- Responsibilities

- Receives policy requests

- Evaluates policy rules

- Notifies applications of policy decisions

- Uses available enforcement points

- License

- Nokia

- Packages

- ohm, ohm-plugins-core0, ohm-dev, ohm-dbg (includes some enforcement point plugins and libraries)
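The four responsibilities listed above (receive a request, evaluate rules, notify applications, use enforcement points) can be pictured with a toy decision loop. This is not OHM's API and not its rule language: the real engine uses scripting and a Prolog-based rule database, whereas the `PolicyEngine` class, the two rules and the resource class names below are invented purely for illustration.

```python
def alarm_is_always_granted(request, active):
    """Hypothetical rule: alarms are never blocked."""
    if request["class"] == "alarm":
        return "grant"
    return None


def no_media_during_call(request, active):
    """Hypothetical rule: deny media playback while a call is active."""
    if request["class"] == "media" and "call" in active:
        return "deny"
    return None


class PolicyEngine:
    """Toy policy engine mirroring the four listed responsibilities."""

    def __init__(self, rules):
        self.rules = rules
        self.active = set()     # resource classes currently granted
        self.notified = []      # decisions reported back to applications
        self.enforced = []      # decisions passed on to enforcement points

    def handle(self, request):
        # 1. Receive the policy request; 2. evaluate rules in order.
        decision = "grant"
        for rule in self.rules:
            verdict = rule(request, self.active)
            if verdict is not None:
                decision = verdict
                break
        if decision == "grant":
            self.active.add(request["class"])
        # 3. Notify the application; 4. hand the decision to enforcement.
        self.notified.append((request["app"], decision))
        self.enforced.append((request["class"], decision))
        return decision


engine = PolicyEngine([alarm_is_always_granted, no_media_during_call])
print(engine.handle({"app": "phone", "class": "call"}))
print(engine.handle({"app": "player", "class": "media"}))
print(engine.handle({"app": "clock", "class": "alarm"}))
```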

PulseAudio Policy Enforcement Point

A PulseAudio plugin that manages volume levels, re-routes streams and, if necessary, forcibly shuts down streams.

- Purpose

- Monitors the headset/headphone connection status

- Responsibilities

- Sets the correct audio routing according to the headset/headphone connection status

- Sets the volume levels at device start-up and ensures that the volume is set to a safe level when headphones are used

- Sets the correct tuning for Audio Post-Processing and the Audio Enhancements Package

- License

- Nokia

- Packages

- ohm, ohm-plugins-core0, ohm-dev, ohm-dbg (includes some enforcement point plugins and libraries)

- This page was last modified on 30 September 2014, at 17:26.